Case Study: AlleyCat AI Works

Aligning founder vision with user experience for an AI-driven knowledge assistant

AlleyCat AI Works empowers creative and technical teams to surface, index, and query their internal knowledge using large language models. I partnered with the founding team to turn early architecture ideas into a cohesive UX vision — one grounded in stakeholder values and real user workflows.

Before:

Product had technical strength but users found onboarding confusing; founders misaligned on narrative.

After:

Unified product vision, clear onboarding flow, and modern conversational UI that non-technical users could set up.

Impact:

Stakeholders aligned in 1 week; onboarding time cut by 17%; product ready for investor demos and pilot rollout.

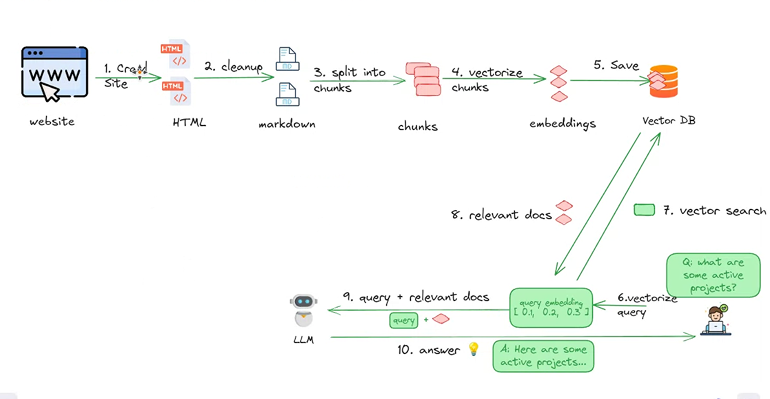

Context & Challenge

The AlleyCat founders had built a promising technical prototype (vector search + LLM retrieval), but needed help connecting how it works to why it matters for users.

The main challenges:

Translate a technical data flow into a clear human interface

Define the product’s value to different user types

Align multiple founder visions into one shared roadmap

Discovery: Listening to Stakeholders

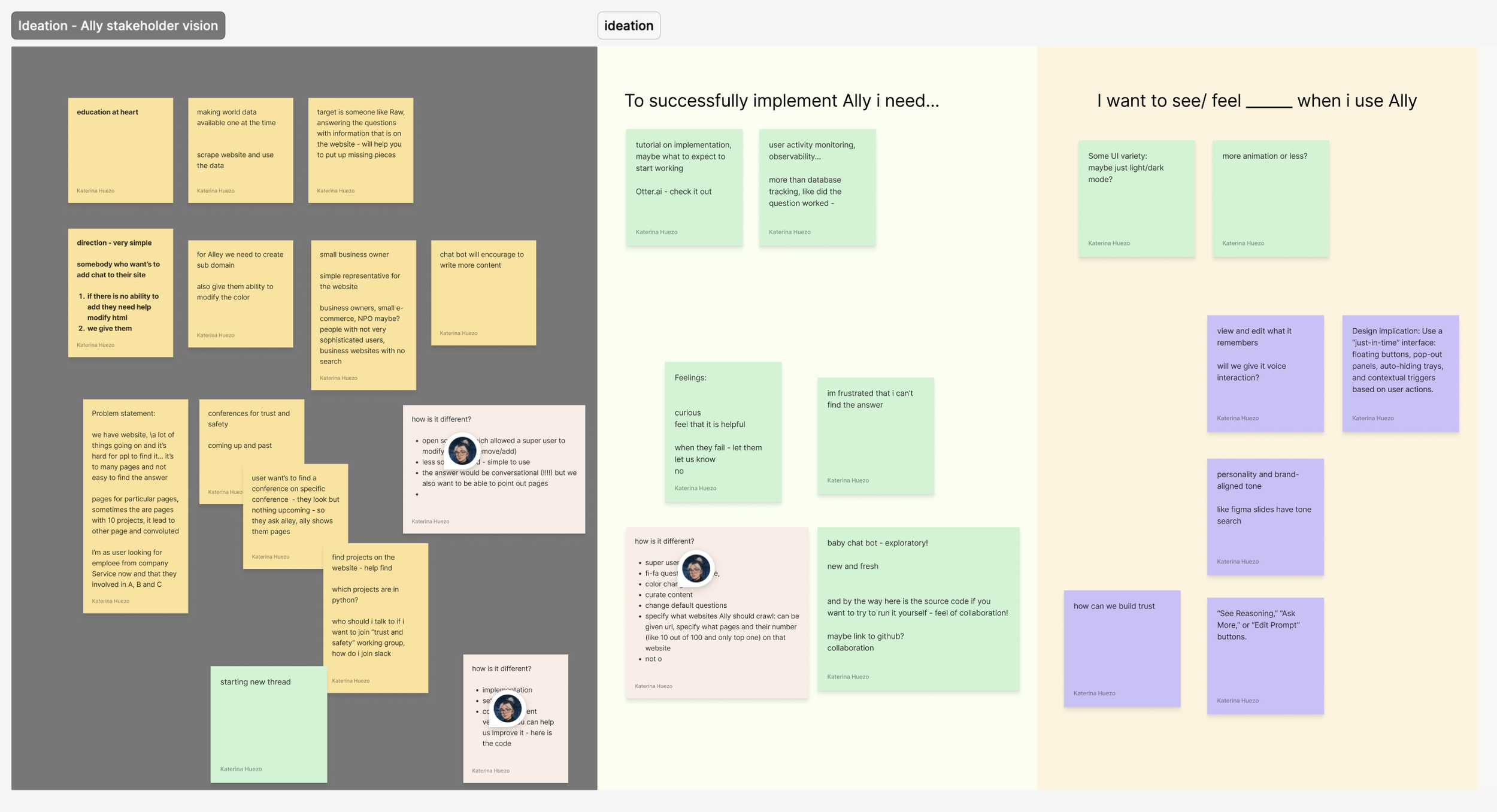

I began with 1:1 interviews and a collaborative ideation workshop to uncover the values driving AlleyCat’s mission.

Methods:

Stakeholder interviews

Vision-mapping workshop

Value-to-user-need translation

Results in Numbers:

3 founder visions consolidated into one UX roadmap

Mapping what success meant to each founder helped define consistent UX principles — transparency, simplicity, and empowerment.

Key insights:

Founders valued education and accessibility (“AI shouldn’t feel locked behind jargon”)

They wanted users to modify, not just consume

Trust and clarity emerged as design anchors

Competitive Landscape

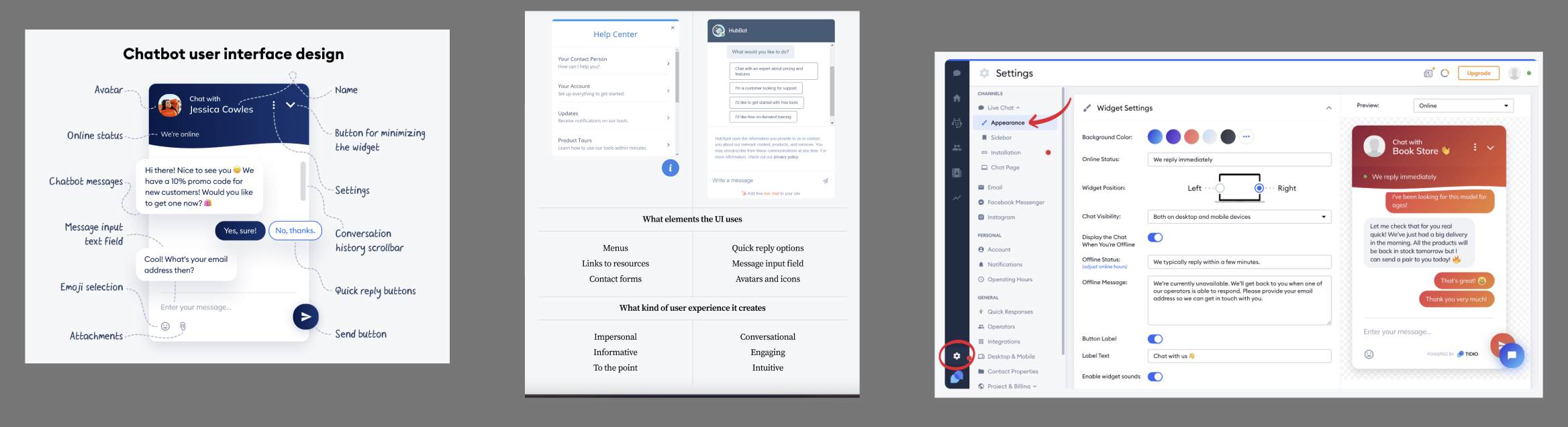

I conducted a comparative audit of existing conversational AI and chatbot interfaces — from Replika and Wysa to Pendo and Tidio — to identify interaction and tone opportunities.

Findings:

Most existing chatbots are either overly playful or too sterile

None clearly explain “how” the AI gets its answers

AlleyCat’s edge: a sense of collaboration and transparency

Defining the Experience

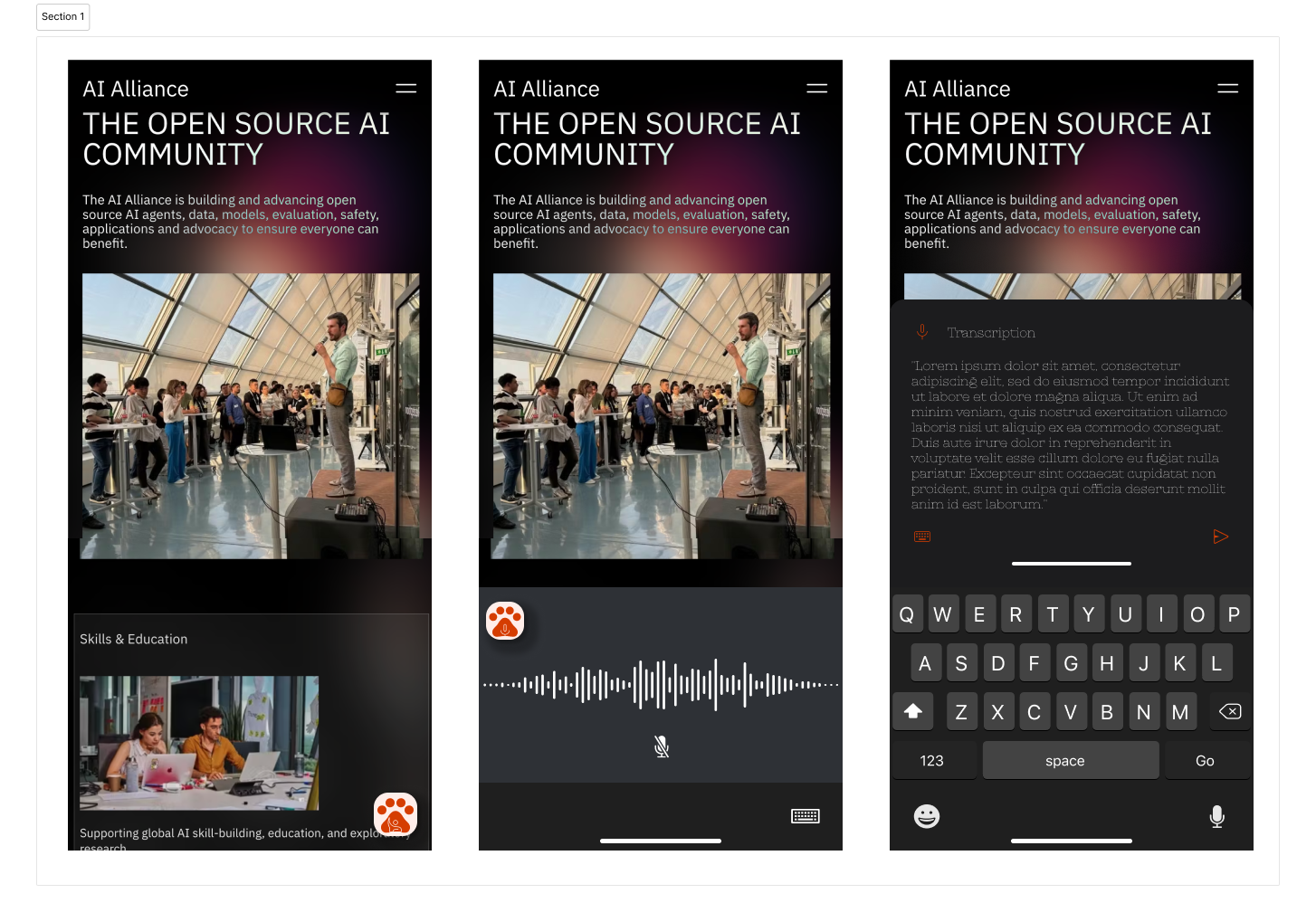

From there, I mapped a visitor flow focused on natural discovery, multimodal interaction (voice and text), and user control.

Design Principles:

Transparency: Show users where data comes from

Empowerment: Allow editing and correction before sending

Playful seriousness: Friendly enough to invite exploration, smart enough to earn trust

Visitors can explore through voice or text, edit transcriptions, and see how the AI retrieves context, which builds trust through visibility.

Result:

Ready-to-ship design system used in subsequent funding deck

UI Design & Interaction

To make the experience approachable and emotionally engaging, I designed a friendly visual system that balances trust, playfulness, and clarity — three traits that emerged from our stakeholder sessions.

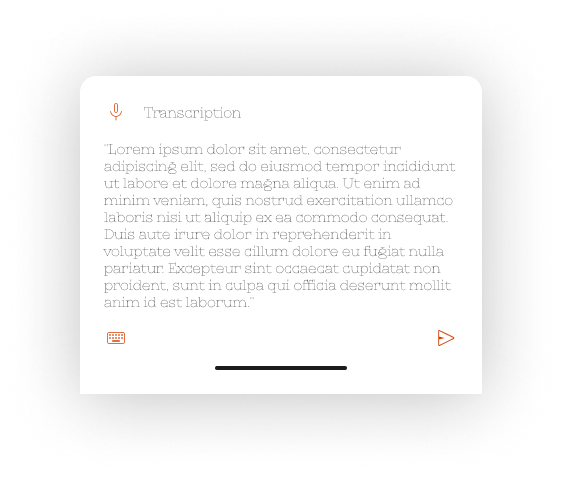

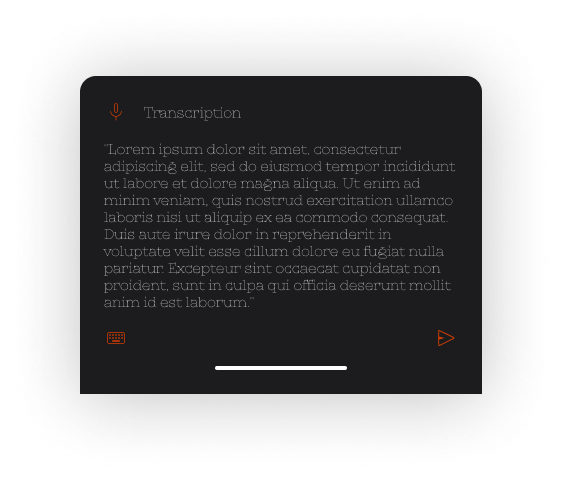

Light & Dark Modes

Because most users would interact with AlleyCat on their phones, the interface needed to be adaptive and comfortable for long sessions.

Both modes use warm neutrals and orange accents to convey energy without strain — a subtle nod to AlleyCat’s brand personality: helpful, clever, and curious.

Icon as Companion

The interactive paw-print icon evolved into more than just a logo — it became Ally, the user’s small AI helper.

Each animation step (listening, thinking, replying) subtly reinforces that there’s intelligence and care behind every response.

This micro-interaction design served two goals:

Gamify trust-building: turn technical latency into a delightful signal of progress

Create emotional continuity: let users instantly recognize the paw motion as Ally “working on it”

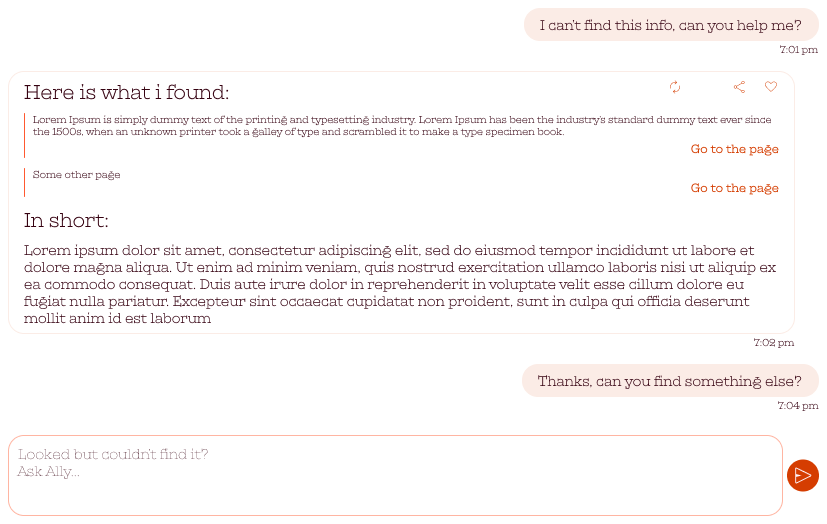

Conversation Flow

The chat UI was intentionally minimalist: large typography, a clear hierarchy, and a focus on readability.

Message panels display both search snippets and LLM summaries side by side, helping users verify information.

The transcription module allows users to review and edit their voice input before submission — giving control without breaking flow.

Gentle animations between states (listening → transcribing → responding) make the interface feel alive rather than mechanical.
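For engineering handoff, the conversation states described above could be modelled as a small state machine. The sketch below is illustrative only and is not AlleyCat's implementation: the state names, the event names, and the separate `REVIEWING` step (where the user edits a transcript before sending) are assumptions chosen to mirror the flow in this section.

```python
from enum import Enum


class ChatState(Enum):
    """Hypothetical UI states; each one would map to a distinct Ally animation."""
    IDLE = "idle"
    LISTENING = "listening"
    TRANSCRIBING = "transcribing"
    REVIEWING = "reviewing"    # user reviews and edits the transcript
    RESPONDING = "responding"


# Allowed transitions: (current state, event) -> next state.
# Event names are assumptions made for this sketch.
TRANSITIONS = {
    (ChatState.IDLE, "start_voice"): ChatState.LISTENING,
    (ChatState.IDLE, "submit_text"): ChatState.RESPONDING,
    (ChatState.LISTENING, "stop_voice"): ChatState.TRANSCRIBING,
    (ChatState.TRANSCRIBING, "transcript_ready"): ChatState.REVIEWING,
    (ChatState.REVIEWING, "submit"): ChatState.RESPONDING,
    (ChatState.REVIEWING, "discard"): ChatState.IDLE,
    (ChatState.RESPONDING, "reply_shown"): ChatState.IDLE,
}


def next_state(state, event):
    """Return the next state, or stay in place if the event is not valid here."""
    return TRANSITIONS.get((state, event), state)


def run(events, state=ChatState.IDLE):
    """Apply a sequence of events and return the final state."""
    for event in events:
        state = next_state(state, event)
    return state
```

Keeping the transitions in one table means an out-of-order event (for example, a submit while still listening) is simply ignored, so the interface never jumps to an animation that does not match what the assistant is doing.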

Result:

2-week sprint from workshop to prototype

Outcomes

The final prototype blended conversational UX with explainability and tone — transforming a backend-heavy system into a warm, mobile-first companion for everyday knowledge retrieval.

By the end of the engagement, AlleyCat AI Works had:

A unified stakeholder vision and product narrative

Defined UX principles and early interaction patterns

A user flow blueprint ready for prototyping

Next Steps: Client Onboarding UX

With AlleyCat’s conversational interface defined, the next design focus is client onboarding — guiding organizations to set up and deploy AlleyCat on their own websites.

The goal: make implementation accessible to non-technical teams.

Future UX and UI work will center on:

A guided setup flow that walks users through data-source connection, scraping preferences, and brand customization

No-code integration tools with clear visuals and instant feedback

Contextual help from Ally itself — the assistant explaining its own setup steps

Emphasis on confidence and transparency, so users feel empowered rather than intimidated by AI terminology

The challenge is to design a system that’s technically sophisticated under the hood, yet feels effortless and even fun to configure. This next phase continues the same design philosophy: turning complexity into clarity, and technology into trust.