NASI: un-cringing social media experience

Case Study: NASI - New Age Social Instrument

From a simple social scraper to a vibe companion that makes social media fun again.

Project Overview

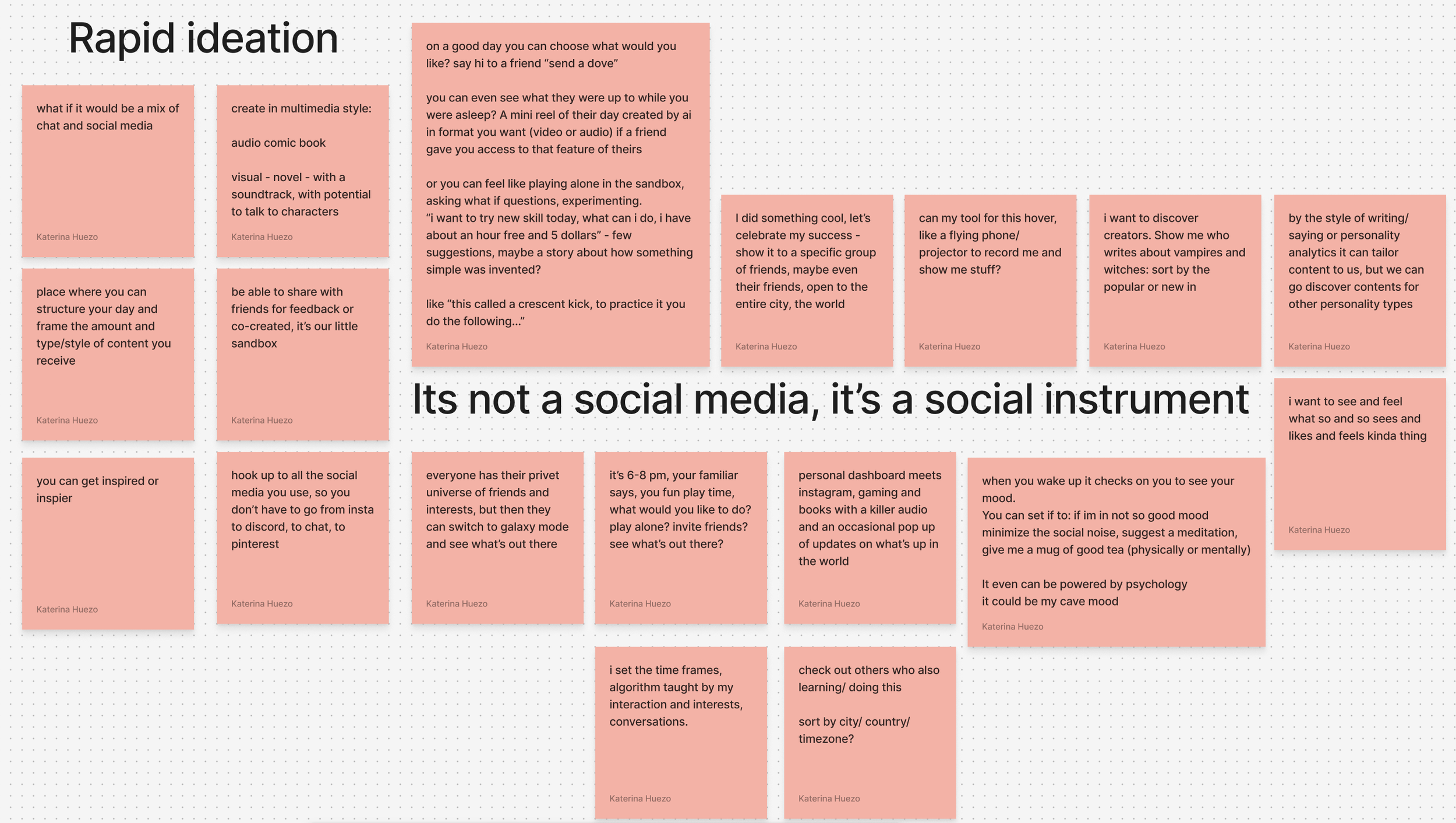

NASI began with a simple question:

What if AI could learn to recognize human emotion and curate content accordingly?

That curiosity turned into a technical deep dive — mapping what it would actually take to build such a system and what form it should take. Early on, I realized NASI needed to start as a crawler, collecting posts across a user’s social media accounts. Then, an analytical module would process the data, categorizing it into topics based on either user-defined interests (travel, cooking, fashion) or onboarding filters.
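The analytical module's topic step can be pictured as a keyword-based tagger. This is a simplified sketch. `Post`, `INTERESTS`, and `categorize` are hypothetical names, and the keyword lists are made up. A real classifier would more likely use embeddings or a trained model than literal word matching.

```python
from dataclasses import dataclass, field

# Hypothetical interest taxonomy (assumption): topic -> trigger keywords.
INTERESTS = {
    "travel": {"flight", "beach", "hostel", "itinerary"},
    "cooking": {"recipe", "oven", "pasta", "bake"},
    "fashion": {"outfit", "runway", "thrift", "denim"},
}

@dataclass
class Post:
    source: str  # which connected account the crawler pulled the post from
    text: str
    topics: set[str] = field(default_factory=set)

def categorize(post: Post, interests: dict[str, set[str]]) -> Post:
    """Tag a crawled post with every interest whose keywords appear in its text."""
    words = {w.strip(".,!?#").lower() for w in post.text.split()}
    post.topics = {topic for topic, keywords in interests.items() if words & keywords}
    return post

posts = [
    Post("account_a", "New pasta recipe, bake for 20 min!"),
    Post("account_b", "Thrift haul: vintage denim outfit"),
    Post("account_a", "Just vibing"),
]
for p in posts:
    categorize(p, INTERESTS)
    print(p.source, sorted(p.topics))
```

Posts that match nothing (like the last one) keep an empty topic set. Onboarding filters could then decide whether such posts are shown or dropped.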

From there, the challenge was to turn all that analysis into something beautiful and intuitive.

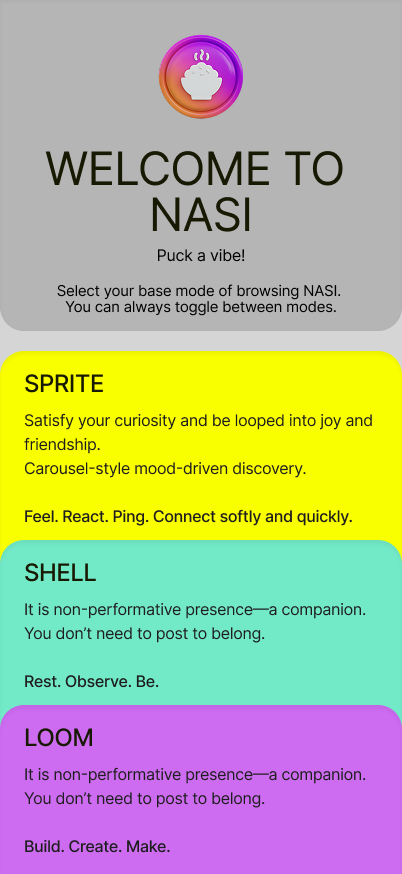

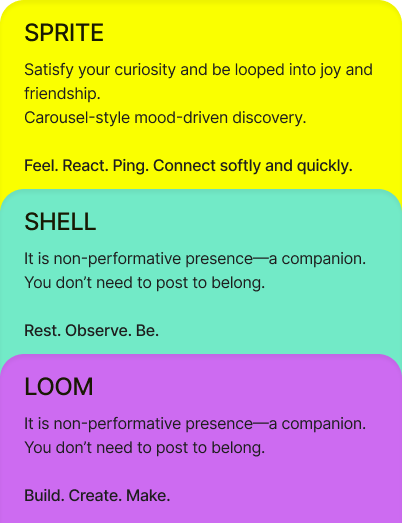

That’s where Sprite and Shell came in:

Sprite, a dashboard-style interface for exploration and insight.

Shell, an infinite-scroll mode for immersive, emotional browsing.

The Challenge

Social media hasn’t really evolved in almost 10 years; it has only expanded. The interfaces are efficient, but the experience feels empty: there are few joyful interactions, and feeds are dull, algorithmically designed to trap users in doomscrolling. Creators chase algorithms. Users chase validation. Everyone ends up exhausted.

Our interviews (about 50 users and 5 creators) revealed the same pattern:

35 % time lost to reposting

31 % creative burnout

23 % missed reach

17 % algorithm chaos

The core question shifted: Can AI bring joy, presence, and emotional awareness back into online interaction?

Discovery & Insights

What began as UX research for a posting tool turned into a study of digital mood. Over 100 users and 15 creators shared stories of fatigue and detachment — but also curiosity about AI as a mirror, not a manager.

“I don’t need another platform. I need something that understands what content I want to see today, so I can feel like part of an actual community.”

Cultural shift: people want intimacy and community, not performance.

Technological shift: multimodal, memory-based AI now makes that possible.

The discovery reframed NASI’s mission: from optimizing content flow to re-humanizing the digital experience.

Strategy & Approach

We designed NASI as an operating system for online social life, built around emotional appeal.

Three interconnected systems make it possible:

Memory System: Remembers what you’ve interacted with, how it made you feel, and the patterns that define your rituals.

Mood Engine (modular backend): Blends explicit (user-chosen), passive (scroll behavior), and inferred (language tone) inputs to modulate feed intensity and pacing.

Persona Layer: Users curate their personal experience by filtering and prioritizing the types of content they subscribe to.

The result: interaction becomes the interface. NASI doesn’t wait for prompts — it feels your rhythm and flows with you.
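The Mood Engine's blending step could be sketched as a weighted average of whichever signals are available, mapped onto feed settings. This is a hypothetical illustration with several assumptions:

- Each input is an energy level in [-1, 1], running from drained to energized.
- The weights are placeholders.
- `FeedSettings` and its `intensity` and `posts_per_minute` fields are invented here, not NASI's actual design.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative weights (assumption): an explicit user choice counts most.
WEIGHTS = {"explicit": 0.5, "passive": 0.2, "inferred": 0.3}

@dataclass(frozen=True)
class FeedSettings:
    intensity: float       # 0 = calm, low-stimulation feed; 1 = high-stimulation feed
    posts_per_minute: int  # pacing of new items

def blend_mood(explicit: Optional[float], passive: Optional[float],
               inferred: Optional[float]) -> float:
    """Weighted average of the available signals (each in [-1, 1]); missing ones are skipped."""
    signals = {"explicit": explicit, "passive": passive, "inferred": inferred}
    present = {name: value for name, value in signals.items() if value is not None}
    if not present:
        return 0.0  # no information: assume a neutral mood
    total_weight = sum(WEIGHTS[name] for name in present)
    return sum(WEIGHTS[name] * value for name, value in present.items()) / total_weight

def feed_settings(mood: float) -> FeedSettings:
    """Map a blended energy level in [-1, 1] to feed intensity and pacing."""
    intensity = (min(max(mood, -1.0), 1.0) + 1) / 2  # clamp, then rescale to [0, 1]
    return FeedSettings(intensity=intensity, posts_per_minute=4 + round(8 * intensity))

# A user who picked "low energy" and whose messages read as subdued; no scroll data yet.
mood = blend_mood(explicit=-0.5, passive=None, inferred=-0.5)
print(feed_settings(mood))
```

Skipping missing signals and renormalizing the remaining weights keeps a brand-new user with no scroll history from being treated as if their passive signal were zero.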

Design Direction

Each NASI mode translates emotion into interface:

Loom, Creator Mode

A calm, structured workspace to create once and publish everywhere, with the option to browse inspirational content laser-focused on a specific topic. Captions, hashtags, and tone adapt automatically.

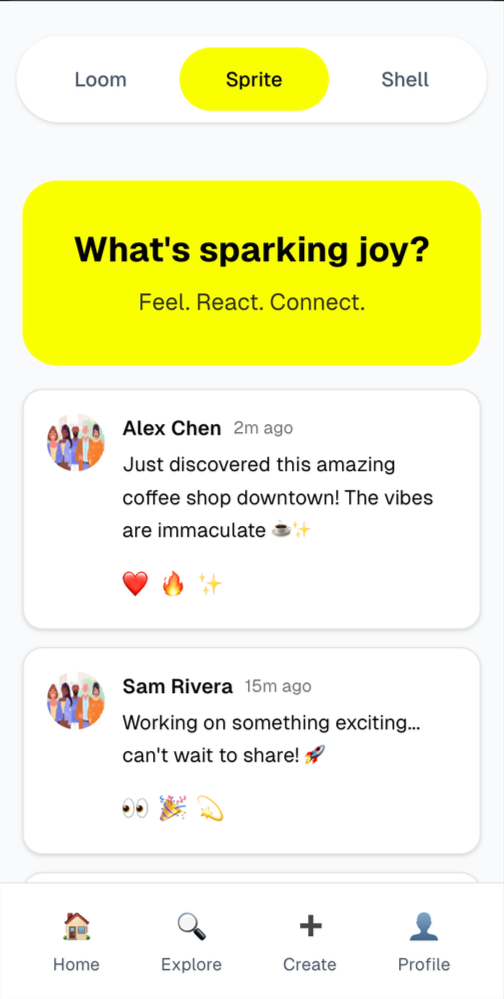
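"Create once, publish everywhere" implies a per-platform adaptation step. This minimal sketch assumes each target is described only by a character limit and a maximum hashtag count. The platform names and limits are placeholders, not the real rules of any network. Tone adaptation, which would likely involve a language model, is left out.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PlatformRules:
    name: str
    max_chars: int     # total length allowed for caption plus hashtags
    max_hashtags: int

# Placeholder targets (assumption): real limits differ per network and change over time.
TARGETS = [PlatformRules("platform_a", 80, 2), PlatformRules("platform_b", 300, 5)]

def adapt(caption: str, hashtags: list[str], rules: PlatformRules) -> str:
    """Fit one draft to a platform: keep the first N hashtags, then trim the caption to fit."""
    tags = " ".join(f"#{tag}" for tag in hashtags[: rules.max_hashtags])
    budget = rules.max_chars - len(tags) - 1  # room for the caption and one separating space
    if len(caption) > budget:
        caption = caption[: max(budget - 1, 0)].rstrip() + "…"  # leave room for the ellipsis
    return f"{caption} {tags}".strip()

draft = "Slow Sunday: thrifted a linen shirt, made iced matcha, and finally finished my zine."
tags = ["slowliving", "thrift", "zine", "matcha"]
for target in TARGETS:
    post = adapt(draft, tags, target)
    print(target.name, len(post), post)
```

Hashtags are trimmed before the caption, so the caption gets whatever space is left. A real version might instead rank hashtags or rewrite the caption rather than truncate it.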

Sprite, Discovery Mode

A playful, fast-moving feed for curiosity and serendipity — powered by the modular backend, content architecture and categorization.

Shell, Ambient Mode

Minimal, atmospheric browsing for reflective days. Low stimulation, high connection.

Across all modes, subtle color, tempo, and animation shifts express mood — turning what used to be scrolling into surfing with feeling.
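Turning mood into color, tempo, and animation can be framed as interpolating between two sets of design tokens. This is a hypothetical sketch. It assumes mood arrives as an energy value in [0, 1]. `UITheme`, the hex colors, and the durations are placeholder tokens, not NASI's palette.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class UITheme:
    accent: str           # #RRGGBB accent color
    transition_ms: int    # animation duration
    drift_px_per_s: int   # ambient auto-scroll speed

CALM = UITheme("#6A8CAF", 900, 20)    # placeholder tokens for low-energy moods
VIVID = UITheme("#FF5E8A", 250, 120)  # placeholder tokens for high-energy moods

def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation: t=0 gives a, t=1 gives b."""
    return a + (b - a) * t

def mix_hex(c1: str, c2: str, t: float) -> str:
    """Interpolate two #RRGGBB colors channel by channel."""
    rgb1 = [int(c1[i:i + 2], 16) for i in (1, 3, 5)]
    rgb2 = [int(c2[i:i + 2], 16) for i in (1, 3, 5)]
    return "#" + "".join(f"{round(lerp(a, b, t)):02X}" for a, b in zip(rgb1, rgb2))

def theme_for(energy: float) -> UITheme:
    t = min(max(energy, 0.0), 1.0)  # clamp to [0, 1]
    return UITheme(
        accent=mix_hex(CALM.accent, VIVID.accent, t),
        transition_ms=round(lerp(CALM.transition_ms, VIVID.transition_ms, t)),
        drift_px_per_s=round(lerp(CALM.drift_px_per_s, VIVID.drift_px_per_s, t)),
    )

print(theme_for(0.3))
```

Interpolating rather than switching themes is what lets the shifts stay subtle: small mood changes produce small visual changes.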

Outcome / Next Steps

Prototype testing validated the emotional thesis:

87 % of participants would try NASI immediately

23 % expressed trust concerns, leading to a transparent data-ethics framework

50+ early testers and 15 active creators already in the waitlist community

Roadmap

Q4 2025 – Prototype Testing + MVP polish

Q1 2026 – Creator Onboarding Pilot

Q2 2026 – MVP Launch (“trend-girl summer”)

Business Model

Free creator tier

Tiered subscriptions

Brand deal revenue share

Future: premium agent skins and an analytics API

Core Components

From a Developer’s Perspective

-

Episodic memory (last interaction, emotional tags)

Long-term associative memory (patterns over time, rituals)

Storage of emotional states and user reactions to content
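The episodic/long-term split can be illustrated with two structures: a bounded buffer of recent interactions, plus running counts of which emotional tags co-occur with which topics. This simplified sketch rests on several assumptions:

- `Interaction`, `MemoryStore`, and the tag vocabulary are hypothetical.
- Associative memory at scale would more plausibly live in the vector database listed further down than in plain counters.

```python
from __future__ import annotations

from collections import Counter, defaultdict, deque
from dataclasses import dataclass

@dataclass(frozen=True)
class Interaction:
    post_id: str
    topic: str
    emotion: str   # emotional tag, e.g. "calm", "joy", "stress"
    reaction: str  # user reaction, e.g. "like", "skip", "save"

class MemoryStore:
    def __init__(self, episodic_size: int = 50) -> None:
        # Episodic memory: only the most recent interactions; the oldest is evicted first.
        self.episodic: deque[Interaction] = deque(maxlen=episodic_size)
        # Long-term associative memory: topic -> count of each emotion seen with it.
        self.associations: defaultdict[str, Counter] = defaultdict(Counter)

    def record(self, event: Interaction) -> None:
        """Store an interaction and its emotional tag in both memories."""
        self.episodic.append(event)
        self.associations[event.topic][event.emotion] += 1

    def last_interaction(self) -> Interaction | None:
        return self.episodic[-1] if self.episodic else None

    def dominant_emotion(self, topic: str) -> str | None:
        """The emotion most often associated with a topic so far (ties: first seen)."""
        counts = self.associations.get(topic)
        return counts.most_common(1)[0][0] if counts else None

mem = MemoryStore(episodic_size=2)
mem.record(Interaction("p1", "cooking", "calm", "save"))
mem.record(Interaction("p2", "news", "stress", "skip"))
mem.record(Interaction("p3", "cooking", "joy", "like"))
mem.record(Interaction("p4", "cooking", "calm", "like"))
print([e.post_id for e in mem.episodic], mem.dominant_emotion("cooking"))
```

Post `p1` has already left episodic memory, but it still shapes the long-term association for cooking. That is the difference between the two layers in this sketch.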

-

Mood inputs: explicit (user-selected), passive (biometrics, scroll behavior), inferred (language/tone)

Mood → behavioral shifts in agent tone, speed, content recs

State machine or reinforcement loop for emotional state modulation
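The state-machine option could be as small as three states, each bound to a behavior profile covering tone, speed, and recommendations. This is a hypothetical sketch:

- The states, thresholds, and `Behavior` fields are illustrative.
- Input is assumed to be one mood score in [-1, 1].
- The hysteresis (separate enter and exit thresholds, so noisy input doesn't make the agent flicker) is an added design choice, not something stated above.

A reinforcement loop could replace the fixed thresholds with learned ones.

```python
from dataclasses import dataclass
from enum import Enum

class State(Enum):
    NEUTRAL = "neutral"
    LOW = "low"    # drained or down
    HIGH = "high"  # excited or energized

@dataclass(frozen=True)
class Behavior:
    tone: str     # how the agent words things
    speed: float  # relative feed pacing
    recs: str     # kind of content to favor

BEHAVIOR = {
    State.NEUTRAL: Behavior("friendly", 1.0, "mixed"),
    State.LOW: Behavior("gentle", 0.5, "comfort"),
    State.HIGH: Behavior("playful", 1.5, "novelty"),
}

# Hysteresis: a stronger signal is needed to enter LOW/HIGH than to stay there.
ENTER, EXIT = 0.6, 0.3

def step(state: State, mood: float) -> State:
    """Advance one tick given a mood score in [-1, 1] (negative = low, positive = high)."""
    if state is State.NEUTRAL:
        if mood <= -ENTER:
            return State.LOW
        if mood >= ENTER:
            return State.HIGH
        return State.NEUTRAL
    if state is State.LOW:
        # Leave LOW once mood recovers past -EXIT; extremes route through NEUTRAL first.
        return State.NEUTRAL if mood > -EXIT else State.LOW
    return State.NEUTRAL if mood < EXIT else State.HIGH

state = State.NEUTRAL
trace = []
for mood in [-0.2, -0.7, -0.5, -0.1, 0.8]:
    state = step(state, mood)
    trace.append(state.value)
print(trace, BEHAVIOR[state])
```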

-

Modular personality templates (Witchy Archivist, Chaos Goblin, etc.)

Adjustable voice/tone settings: language model persona layering

Custom emotional thresholds per agent archetype (e.g. Muse reacts to grief, Strategist to burnout)
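Custom thresholds per archetype could be expressed as data on each personality template. The Muse/grief and Strategist/burnout pairings come from the list above. Everything else is hypothetical: `PersonaTemplate`, the numeric thresholds, and the prompt wording. `build_prompt` only assembles a text prompt and does not call any particular language model API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PersonaTemplate:
    name: str
    system_prompt: str            # persona text layered onto the language model prompt
    thresholds: dict[str, float]  # emotion -> minimum detected intensity that triggers a reaction

# Illustrative thresholds (assumption), keyed by the pairings named in the text.
TEMPLATES = {
    "muse": PersonaTemplate("Muse", "You are a gentle, poetic companion.", {"grief": 0.4}),
    "strategist": PersonaTemplate("Strategist", "You are a calm, practical planner.", {"burnout": 0.5}),
}

def triggered(template: PersonaTemplate, detected: dict[str, float]) -> list[str]:
    """Emotions (in the template's order) whose detected intensity meets the template's threshold."""
    return [emotion for emotion, limit in template.thresholds.items()
            if detected.get(emotion, 0.0) >= limit]

def build_prompt(template: PersonaTemplate, user_message: str, detected: dict[str, float]) -> str:
    """Layer the persona and any triggered emotional cue on top of the user's message."""
    hits = triggered(template, detected)
    note = f" The user may be experiencing {', '.join(hits)}; respond with care." if hits else ""
    return f"{template.system_prompt}{note}\n\nUser: {user_message}"

detected = {"grief": 0.7, "burnout": 0.2}
print(build_prompt(TEMPLATES["muse"], "rough week", detected))
```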

-

Agents tag, filter, and serve scrolls based on:

Current mood

Emotional history

Intent (“Do I need comfort, chaos, clarity?”)

Think of it as a personal DJ for your emotional landscape.
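Serving scrolls on those three criteria can be sketched as a weighted score per candidate post. Everything here is hypothetical: the `Candidate` fields, the intent labels (mirroring "comfort, chaos, clarity"), and the weights. They are placeholders for whatever the agents would actually learn.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Candidate:
    post_id: str
    energy: float          # how stimulating the post is, 0..1
    topic: str
    serves: frozenset[str]  # intents this post satisfies, e.g. {"comfort"}

def score(c: Candidate, mood_energy: float, history: dict[str, float], intent: str,
          w_mood: float = 0.4, w_history: float = 0.3, w_intent: float = 0.3) -> float:
    """Higher is better. mood_energy is 0..1; history maps topic -> past affinity in 0..1."""
    mood_fit = 1.0 - abs(c.energy - mood_energy)  # match stimulation to the current mood
    history_fit = history.get(c.topic, 0.5)        # unknown topics get a neutral prior
    intent_fit = 1.0 if intent in c.serves else 0.0
    return w_mood * mood_fit + w_history * history_fit + w_intent * intent_fit

def serve(cands: list[Candidate], mood_energy: float, history: dict[str, float],
          intent: str, k: int = 3) -> list[str]:
    """Return the ids of the top-k candidates for this mood, history, and intent."""
    ranked = sorted(cands, key=lambda c: score(c, mood_energy, history, intent), reverse=True)
    return [c.post_id for c in ranked[:k]]

cands = [
    Candidate("rave_clip", 0.9, "music", frozenset({"chaos"})),
    Candidate("tea_ritual", 0.2, "cooking", frozenset({"comfort"})),
    Candidate("budget_tips", 0.4, "finance", frozenset({"clarity"})),
]
history = {"cooking": 0.8, "music": 0.6}
print(serve(cands, mood_energy=0.3, history=history, intent="comfort", k=2))
```

With a low-energy mood and a "comfort" intent, the calm cooking post wins. The same pool with high energy and a "chaos" intent puts the rave clip first.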

-

LLM wrapper + persona conditioning

Fine-tuned embeddings per user-agent relationship

Vector database for emotional memory

Middleware for emotion detection & modulation

Modular UI layer for agent visual/tone reactions (animation, text bubble style, ambient changes)
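A vector database for emotional memory essentially answers "which past moments feel most like this one?" with nearest-neighbor search over embeddings. The in-memory class below is only a stand-in for a real vector store, with these assumptions:

- It does brute-force cosine similarity.
- `EmotionalMemoryIndex` and its `upsert`/`query` methods are a hypothetical interface.
- The three-dimensional vectors are hand-made placeholders for what an embedding model would produce.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

class EmotionalMemoryIndex:
    """Brute-force stand-in for a vector database: stores (id, vector, metadata) rows."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, list[float], dict]] = []

    def upsert(self, item_id: str, vector: list[float], metadata: dict) -> None:
        self.rows = [r for r in self.rows if r[0] != item_id]  # replace if already present
        self.rows.append((item_id, vector, metadata))

    def query(self, vector: list[float], top_k: int = 2) -> list[tuple[str, float, dict]]:
        scored = [(rid, cosine(vector, vec), meta) for rid, vec, meta in self.rows]
        return sorted(scored, key=lambda r: r[1], reverse=True)[:top_k]

# Placeholder 3-d "embeddings": imagine axes roughly [calm, joy, stress].
index = EmotionalMemoryIndex()
index.upsert("sunday_baking", [0.9, 0.6, 0.1], {"emotion": "calm", "reaction": "save"})
index.upsert("deadline_thread", [0.1, 0.1, 0.9], {"emotion": "stress", "reaction": "skip"})
index.upsert("dog_video", [0.5, 0.9, 0.1], {"emotion": "joy", "reaction": "like"})

for item_id, sim, meta in index.query([0.8, 0.7, 0.2], top_k=2):
    print(item_id, round(sim, 3), meta["emotion"])
```

A production store would add approximate nearest-neighbor indexing so that queries stay fast as memory grows. The scan here is linear in the number of stored items.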

Reflection

NASI redefined how I think about “user experience.” It’s not about faster interfaces — it’s about felt interaction. By putting emotion and curiosity at the core, AI stops being a productivity tool and becomes a creative companion. From scraper to vibe engine — NASI reminds us that digital connection can feel human again.